In grouper 2.4 patch #47, Grouper can sync database tables. This is intended for these use cases:

- Sync memberships to their own table for performance reasons (when you are doing reporting and have a lot of joins and subselects, a table without lots of joins can help a lot)

- Sync memberships to another database. Other applications might not want to depend on Grouper at runtime, or have other performance reasons

- Sync a subject table from another database to Grouper. This might be for performance or availability reasons

- Sync some table to another table. This function is generic so use it for whatever you want (groups, attributes, etc)

The tables in the "from" and "to" need to exist and the right columns and column types need to be there. In addition you cannot name columns which are keywords in our database.

Currently this only works in full sync mode. Real time incremental updates will be added soon.

Note, you can sync across database vendors as well (e.g. bring subjects from Oracle to MySQL).

Example: sync memberships to a table in the Grouper database or another database

- Make a view with the data you want to sync

- This can be based on some ad hoc groups, folders, or attributes on groups

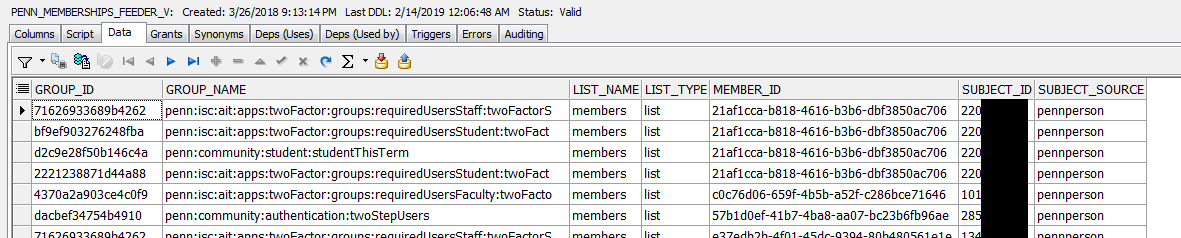

- For instance this could be the view

This view is defined (oracle) as:

/* Formatted on 5/1/2019 11:27:03 AM (QP5 v5.252.13127.32847) */ CREATE OR REPLACE FORCE VIEW PENN_MEMBERSHIPS_FEEDER_V ( GROUP_ID, GROUP_NAME,LIST_NAME, LIST_TYPE, MEMBER_ID, SUBJECT_ID, SUBJECT_SOURCE ) BEQUEATH DEFINER AS SELECT GROUP_ID, group_name, list_name, GMLV.LIST_TYPE, GMLV.MEMBER_ID, GMLV.SUBJECT_ID, GMLV.SUBJECT_SOURCE FROM grouper_memberships_lw_v gmlv WHERE ( GMLV.GROUP_NAME IN ('penn:community:student:studentThisTerm', 'penn:community:student:degreePursual:degreePursual_LLM', ... ) OR gmlv.group_name LIKE 'penn:isc:ait:apps:atlassian:%') AND GMLV.SUBJECT_SOURCE = 'pennperson' AND list_type = 'list';Configure databases in grouper.client.properties. Note, the "id" of the config group is the part of the config that ties them together, e.g. "pcom" or "awsDev"

grouperClient.jdbc.pcom.driver = oracle.jdbc.driver.OracleDriver grouperClient.jdbc.pcom.url = jdbc:oracle:thin:@(DESCRIPTION = (ADDRESS = (PROTOCOL = TCP)(HOST = ... grouperClient.jdbc.pcom.user = myuser grouperClient.jdbc.pcom.pass = ****** grouperClient.jdbc.awsDev.driver = org.postgresql.Driver grouperClient.jdbc.awsDev.url = jdbc:postgresql://penn ... grouperClient.jdbc.awsDev.user = anotheruser grouperClient.jdbc.awsDev.pass = ******

Note, you can use the grouper database like this (in grouper.client.properties)

grouperClient.jdbc.grouper.driver.elConfig = ${edu.internet2.middleware.grouper.cfg.GrouperHibernateConfig.retrieveConfig().propertyValueString("hibernate.connection.driver_class")} grouperClient.jdbc.grouper.url.elConfig = ${edu.internet2.middleware.grouper.cfg.GrouperHibernateConfig.retrieveConfig().propertyValueString("hibernate.connection.url")} grouperClient.jdbc.grouper.user.elConfig = ${edu.internet2.middleware.grouper.cfg.GrouperHibernateConfig.retrieveConfig().propertyValueString("hibernate.connection.username")} grouperClient.jdbc.grouper.pass.elConfig = ${edu.internet2.middleware.grouper.cfg.GrouperHibernateConfig.retrieveConfig().propertyValueString("hibernate.connection.password")}You can do a loader config database like this:

grouperClient.jdbc.pcom2.driver.elConfig = ${edu.internet2.middleware.grouper.app.loader.GrouperLoaderConfig.retrieveConfig().propertyValueString("db.pcom2.driver")} grouperClient.jdbc.pcom2.url.elConfig = ${edu.internet2.middleware.grouper.app.loader.GrouperLoaderConfig.retrieveConfig().propertyValueString("db.pcom2.url")} grouperClient.jdbc.pcom2.user.elConfig = ${edu.internet2.middleware.grouper.app.loader.GrouperLoaderConfig.retrieveConfig().propertyValueString("db.pcom2.user")} grouperClient.jdbc.pcom2.pass.elConfig = ${edu.internet2.middleware.grouper.app.loader.GrouperLoaderConfig.retrieveConfig().propertyValueString("db.pcom2.pass")}Configure the table sync in grouper.client.properties

This example settings

# grouper client database key where copying data from # {valueType: "string"} grouperClient.syncTable.memberships.databaseFrom = pcom # table or view where copying data from # {valueType: "string"} grouperClient.syncTable.memberships.tableFrom = PENN_MEMBERSHIPS_FEEDER_V # grouper client database key where copying data to # {valueType: "string"} grouperClient.syncTable.memberships.databaseTo = awsDev # table or view where copying data to # {valueType: "string"} grouperClient.syncTable.memberships.tableTo = PENN_MEMBERSHIPS_TEMP # columns must match in from and to tables, you can specify columns or do all with an asterisk # {valueType: "string"} grouperClient.syncTable.memberships.columns = * # if there is a primary key, list it, else list the composite keys # {valueType: "string"} grouperClient.syncTable.memberships.primaryKeyColumns = group_id, list_name, member_id # the grouping column is what is uniquely selected, and then batched through to get data. # {valueType: "string"} grouperClient.syncTable.memberships.groupingColumn = group_idSetup an other job to run this in grouper-loader.properties

# Object Type Job class # {valueType: "class", mustExtendClass: "edu.internet2.middleware.grouper.app.loader.OtherJobBase", mustImplementInterface: "org.quartz.Job"} otherJob.membershipSync.class = edu.internet2.middleware.grouper.app.tableSync.TableSyncOtherJob # Object Type Job cron # {valueType: "string"} otherJob.membershipSync.quartzCron = 0 0/30 * * * ? # this is the key in the grouper.client.properties that represents this job otherJob.membershipSync.grouperClientTableSyncConfigKey = memberships # fullSyncFull, fullSyncGroupings, fullSyncChangeFlag, incrementalAllColumns, incrementalPrimaryKey # {valueType: "string"} otherJob.membershipSync.syncType = fullSyncFull

Performance

The performance will be limited by how many changes there are and how quickly it can get the data on both sides.

From a local mysql to a local mysql, if there are 25000 rows and 25000 inserts, it takes 2 minutes. If there are 25000 rows and no updates, it takes 0.3 seconds.

This will select data in batches, insert/update/delete in batches.

Configuration

All settings defaults (grouper.client.properties)

################################

## Sync database table settings

################################

# the grouping column is what is uniquely selected, and then batched through to get data, default across all sql jobs

# {valueType: "integer"}

# grouperClient.syncTableDefault.groupingSize = 10000

# size of jdbc batches, default across all sql jobs

# {valueType: "integer"}

# grouperClient.syncTableDefault.batchSize = 800

# number of bind vars in select, default across all sql jobs

# {valueType: "integer"}

# grouperClient.syncTableDefault.maxBindVarsInSelect = 900

# default database for all sql jobs for status tabls (e.g. grouper_sync*)

# {valueType: "string"}

# grouperClient.syncTableDefault.statusDatabase = grouper

# the grouping column is what is uniquely selected, and then batched through to get data, default across all sql jobs

# {valueType: "integer"}

# grouperClient.syncTableDefault.groupingSize = 10000

# default switch from incremental to full if the number of incrementals is over this threshold

# {valueType: "integer"}

# grouperClient.syncTableDefault.switchFromIncrementalToFullIfOverRecords = 300000

# switch from incremental to full if the number of incrementals is over the threshold, this is full sync to switch to

# fullSyncChangeFlag, fullSyncFull, fullSyncGroups

# {valueType: "string"}

# grouperClient.syncTableDefault.switchFromIncrementalToFullSubtype = fullSyncFull

# switch from incremental to group (if theres a grouping col) if the number of incrementals for a certain group

# {valueType: "integer"}

# grouperClient.syncTableDefault.switchFromIncrementalToGroupIfOverRecordsInGroup = 50000

# switch from incremental to full if the number of groups (and records over threshold) is over this threshold

# i.e. needs to be over 100 groups and over 300000 records

# {valueType: "integer"}

# grouperClient.syncTableDefault.switchFromIncrementalToFullIfOverGroupCount = 100

All settings specific to provisioner (in this case "personSource" is a variable for the provisioner (grouper.client.properties)

# grouper client or loader database key where copying data from

# the 3rd part is the sync_id. in this case "personSource". Defaults to "grouper"

# {valueType: "string"}

#grouperClient.syncTable.personSource.databaseFrom =

# table or view where copying data from, include the schema if needed

# {valueType: "string"}

#grouperClient.syncTable.personSource.tableFrom =

# grouper client or loader database key where copying data to

# {valueType: "string"}

#grouperClient.syncTable.personSource.databaseTo =

# grouper client or loader database key (readonly) if large queries should be performed against a different database

# {valueType: "string"}

#grouperClient.syncTable.personSource.databaseToReadonly =

# table or view where copying data to, include the schema if needed

# {valueType: "string"}

#grouperClient.syncTable.personSource.tableTo = PERSON_SOURCE_TEMP

# columns must match in from and to tables, you can specify columns or do all with an asterisk

# {valueType: "string"}

#grouperClient.syncTable.personSource.columns = *

# if there is a primary key, list it, else list the composite keys. note, this doesnt

# have to literally be the database prmiary key, just need to be a unique col(s) in table

# {valueType: "string"}

# grouperClient.syncTable.personSource.primaryKeyColumns = penn_id

# if doing fullSyncChangeFlag (look for a col that says if the rows are equal, e.g. a timestamp or a checksum)

# {valueType: "string"}

# grouperClient.syncTable.personSource.changeFlagColumn = check_sum

# the grouping column is what is uniquely selected, and then batched through to get data. Optional.

# for groups this should be the group uuid

# {valueType: "string"}

# grouperClient.syncTable.personSource.groupingColumn = penn_id

# the grouping column is what is uniquely selected, and then batched through to get data, defaults to global setting

# {valueType: "integer"}

# grouperClient.syncTable.personSource.groupingSize = 10000

# size of jdbc batches

# {valueType: "integer"}

# grouperClient.syncTable.personSource.batchSize = 800

# number of bind vars in select

# {valueType: "integer"}

# grouperClient.syncTable.personSource.maxBindVarsInSelect = 900

# switch from incremental to full if the number of incrementals is over this threshold

# if this is less than 0, then it will not switch from incremental to full

# {valueType: "integer"}

# grouperClient.syncTable.personSource.switchFromIncrementalToFullIfOverRecords = 300000

# switch from incremental to full if the number of incrementals is over the threshold, this is full sync to switch to

# fullSyncChangeFlag, fullSyncFull, fullSyncGroups

# {valueType: "string"}

# grouperClient.syncTable.personSource.switchFromIncrementalToFullSubtype = fullSyncFull

# switch from incremental to group (if theres a grouping col) if the number of incrementals for a certain group

# if this is less than 0, then it will not switch from incremental to group

# {valueType: "integer"}

# grouperClient.syncTable.personSource.switchFromIncrementalToGroupIfOverRecordsInGroup = 50000

# switch from incremental to full if the number of groups (and records over threshold) is over this threshold

# i.e. needs to be over 100 groups and over 300000 records

# {valueType: "integer"}

# grouperClient.syncTable.personSource.switchFromIncrementalToFullIfOverGroupCount = 100

# if querying a real time table, this is the table, needs to have primary key columns.

# each record will check the source and destination and see what to do

# {valueType: "string"}

# grouperClient.syncTable.personSource.incrementalPrimaryKeyTable = real_time_table

# name of a column that has a sequence or last updated date.

# must be in the incrementalPrimaryKeyTable if incremental primary key sync

# {valueType: "string"}

# grouperClient.syncTable.personSource.incrementalProgressColumn = last_updated

# name of a column that has a sequence or last updated date.

# must be in the main data table if incremental all columns

# {valueType: "string"}

# grouperClient.syncTable.personSource.incrementalAllColumnsColumn = last_updated

# database where status table is. defaults to "grouper"

# {valueType: "string"}

# grouperClient.syncTable.personSource.statusDatabase = grouper

grouper-loader.properties to schedule jobs

################################

## Table sync jobs

## tableSync jobs should use class: edu.internet2.middleware.grouper.app.tableSync.TableSyncOtherJob

## and include a setting to point to the grouperClient config, if not same: otherJob.<otherJobName>.grouperClientTableSyncConfigKey = key

## this is the subtype of job to run: otherJob.<otherJobName>.syncType = fullSyncFull

## (can be: fullSyncFull, fullSyncGroupings, fullSyncChangeFlag, incrementalAllColumns, incrementalPrimaryKey)

################################

# Object Type Job class

# {valueType: "class", mustExtendClass: "edu.internet2.middleware.grouper.app.loader.OtherJobBase", mustImplementInterface: "org.quartz.Job"}

# otherJob.membershipSync.class = edu.internet2.middleware.grouper.app.tableSync.TableSyncOtherJob

# Object Type Job cron

# {valueType: "string"}

# otherJob.membershipSync.quartzCron = 0 0/30 * * * ?

# this is the key in the grouper.client.properties that represents this job

# {valueType: "string"}

# otherJob.membershipSync.grouperClientTableSyncConfigKey = memberships

# fullSyncFull, fullSyncGroupings, fullSyncChangeFlag, incrementalAllColumns, incrementalPrimaryKey

# {valueType: "string"}

# otherJob.membershipSync.syncType = fullSyncFull

Logging

Make sure log4j jar is in the classpath, and configure log4j.properties

log4j.logger.edu.internet2.middleware.grouperClient.jdbc.tableSync.GcTableSyncLog = DEBUG, grouper_stdout log4j.additivity.edu.internet2.middleware.grouperClient.jdbc.tableSync.GcTableSyncLog = false

You will see entries like this. Note, there is an entry every minute for in progress jobs (finalLog: false)

2019-05-06 09:13:57,246: [Thread-20] DEBUG GrouperClientLog.debug(92) - - fullSync: true, key: personSourceTest, finalLog: false, state: inserts, databaseFrom: grouper, tableFrom: testgrouper_sync_subject_from, databaseTo: grouper, tableTo: testgrouper_sync_subject_to, totalCountFrom: 25000, fromGroupingUniqueValues: 25000, groupingsToDelete: 0, numberOfBatches: 3, currentBatch: 2, rowsSelectedFrom: 25000, rowsSelectedTo: 0, sqlBatchExecute: 13 of 25 2019-05-06 09:14:45,341: [main] DEBUG GrouperClientLog.debug(92) - - fullSync: true, key: personSourceTest, finalLog: true, state: deletes, databaseFrom: grouper, tableFrom: testgrouper_sync_subject_from, databaseTo: grouper, tableTo: testgrouper_sync_subject_to, totalCountFrom: 25000, fromGroupingUniqueValues: 25000, groupingsToDelete: 0, numberOfBatches: 3, currentBatch: 2, rowsSelectedFrom: 25000, rowsSelectedTo: 0, sqlBatchExecute: 24 of 25, rowsNeedInsert: 25000, took: 0:01:48.181 2019-05-06 09:14:45,692: [main] DEBUG GrouperClientLog.debug(92) - - fullSync: true, key: personSourceTest, finalLog: true, state: deletes, databaseFrom: grouper, tableFrom: testgrouper_sync_subject_from, databaseTo: grouper, tableTo: testgrouper_sync_subject_to, totalCountFrom: 25000, fromGroupingUniqueValues: 25000, toGroupingUniqueValues: 25000, groupingsToDelete: 0, numberOfBatches: 3, currentBatch: 2, rowsSelectedFrom: 25000, rowsSelectedTo: 25000, rowsWithEqualData: 25000, took: 0:00:00.330 2019-05-06 09:14:46,032: [main] DEBUG GrouperClientLog.debug(92) - - fullSync: true, key: personSourceTest, finalLog: true, state: deletes, databaseFrom: grouper, tableFrom: testgrouper_sync_subject_from, databaseTo: grouper, tableTo: testgrouper_sync_subject_to, totalCountFrom: 25000, fromGroupingUniqueValues: 25000, toGroupingUniqueValues: 25000, groupingsToDelete: 1, sqlBatchExecute: 0 of 1, numberOfBatches: 3, currentBatch: 2, rowsSelectedFrom: 25000, rowsSelectedTo: 24999, rowsWithEqualData: 24998, rowsNeedInsert: 1, rowsNeedUpdate: 1, took: 0:00:00.318

Example: sync subject source

- Setup view.

- Setup databases in grouper.client.properties (see memberships example)

Configure the table sync in grouper.client.properties

# grouper client database key where copying data from # {valueType: "string"} grouperClient.syncTable.personSource.databaseFrom = pcom # table or view where copying data from # {valueType: "string"} grouperClient.syncTable.personSource.tableFrom = PERSON_SOURCE # grouper client database key where copying data to # {valueType: "string"} grouperClient.syncTable.personSource.databaseTo = awsDev # table or view where copying data to # {valueType: "string"} grouperClient.syncTable.personSource.tableTo = PERSON_SOURCE_TEMP # columns must match in from and to tables, you can specify columns or do all with an asterisk # {valueType: "string"} grouperClient.syncTable.personSource.columns = * # if there is a primary key, list it, else list the composite keys # {valueType: "string"} grouperClient.syncTable.personSource.primaryKeyColumns = penn_id # the grouping column is what is uniquely selected, and then batched through to get data. # {valueType: "string"} grouperClient.syncTable.personSource.groupingColumn = penn_id # the grouping column is what is uniquely selected, and then batched through to get data, defaults to 10000 # {valueType: "integer"} grouperClient.syncTable.personSource.groupingSize = 10000 # size of jdbc batches # {valueType: "integer"} grouperClient.syncTable.personSource.batchSize = 50Setup an other job to run this in grouper-loader.properties

# Object Type Job class # {valueType: "class", mustExtendClass: "edu.internet2.middleware.grouper.app.loader.OtherJobBase", mustImplementInterface: "org.quartz.Job"} otherJob.personSourceSync.class = edu.internet2.middleware.grouper.app.tableSync.TableSyncOtherJob # Object Type Job cron # {valueType: "string"} otherJob.personSourceSync.quartzCron = 0 * * * * ? # this is the key in the grouper.client.properties that represents this job otherJob.personSourceSync.grouperClientTableSyncConfigKey = personSource