Survival in a World of Zero Trust

Try the following Thought Experiment. Take 100 random users, put them in a room, and ask them to log into your favorite federated web app. If you tell them to first go create an account with some IdP of Last Resort, they will probably groan and quite likely not grok the value of what you are trying to demonstrate. Indeed.

Instead tell them to choose their favorite social IdP on the discovery interface. This will immediately win over a sizable proportion of your audience who will blithely log into your app with almost zero effort (since most social IdPs will happily maintain a user session indefinitely).

However, as Jim Fox demonstrated on the social identity mailing list the other day, not all users feel comfortable performing a federated login at a social IdP. Some users have a healthy distrust for social IdPs, and moreover, that lack of trust is on the rise. So be it.

What can we conclude from this Thought Experiment? Here's my take. Bottom line: Federation is Hard. By no means is the Federated Model a done deal. It may or may not survive, and moreover, I can't predict with any accuracy what will prevail.

That said, I believe in the Federated Model and I want it to work in the long run, so here's what I think we should do in the short term. The appearance of social IdPs on the discovery interfaces of Federation-wide SPs is an inevitability. The sooner we do it, the better off we as users will be. For some (many?), this will simplify the federation experience, and we dearly need all the simplification we can get.

We don't need any more IdPs of Last Resort in the wild, at least not until the trust issues associated with IdPs have been worked out. I'm talking of course about multifactor authentication, assurance, user consent, and privacy, all very hard problems that continue to impede the advance of the Federated Model. In today's atmosphere of Zero Trust, it makes absolutely no sense to keep building and relying on password-based SAML IdPs. That elusive One IdP That Rules Them All simply doesn't exist. We need something better. Something that's simple, safe, and private.

If you're still reading this, you'll want to know what the viable alternatives are. Honestly, I don't know. All I can say is that I'm intrigued by the user centric approaches of the IRMA project and the FIDO Alliance. If similar technologies were to proliferate, it would be a death knell for the centralized IdP model. In its place would rise the Attribute Authority, and I don't mean the SSO-based AAs of today. I mean standalone AAs that dish out attribute assertions that end users control. This is the only approach I can see working in a World of Zero Trust.

Certificate Migration in Metadata

In the last few months, InCommon Operations has encountered two significant interoperability “incidents” (but it is likely there were others we didn’t hear about) that were subsequently traced to improper migration of certificates in metadata. When migrating certificates in metadata, site administrators are advised to carefully follow the detailed processes documented in the wiki. These processes have been shown to maintain interoperability in the face of certificate migration, which tends to be error prone.

Of particular importance is the migration of certificates in SP metadata since a mistake here can affect users across a broad range of IdPs (whereas a mistake in the migration of a certificate in IdP metadata affects only that IdP’s users). When migrating a certificate in SP metadata, the most common mistake is to add a new certificate to metadata before the SP software has been properly configured. This causes IdPs to unknowingly encrypt assertions with public keys that have no corresponding decryption keys configured at the SP. A major outage at the SP will occur as a result.

Another issue we’re starting to see is due to increased usage of Microsoft AD FS at the IdP. As it turns out, AD FS will not consume an entity descriptor in SP metadata that contains two encryption keys. To avoid this situation, the old certificate being migrated out of SP metadata should be marked as a signing certificate only, which avoids any issues with AD FS IdPs. See the Certificate Migration wiki page for details.
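The two-encryption-key condition can be checked before metadata is submitted. The following is a minimal sketch, not a tool InCommon provides: the sample metadata, the entityID, and the function name are all hypothetical, and the certificate bodies are placeholders. It relies on the SAML metadata rule that a KeyDescriptor with no use attribute serves for both signing and encryption.

```python
import xml.etree.ElementTree as ET

MD = "urn:oasis:names:tc:SAML:2.0:metadata"

# Hypothetical SP metadata in mid-migration. The old certificate is marked
# signing-only; the new certificate has no "use" attribute, so it serves
# for both signing and encryption. Certificate bodies are placeholders.
SAMPLE_SP_METADATA = """
<md:EntityDescriptor xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata"
                     xmlns:ds="http://www.w3.org/2000/09/xmldsig#"
                     entityID="https://sp.example.org/shibboleth">
  <md:SPSSODescriptor
      protocolSupportEnumeration="urn:oasis:names:tc:SAML:2.0:protocol">
    <md:KeyDescriptor use="signing">
      <ds:KeyInfo><ds:X509Data>
        <ds:X509Certificate>OLD-CERT-PLACEHOLDER</ds:X509Certificate>
      </ds:X509Data></ds:KeyInfo>
    </md:KeyDescriptor>
    <md:KeyDescriptor>
      <ds:KeyInfo><ds:X509Data>
        <ds:X509Certificate>NEW-CERT-PLACEHOLDER</ds:X509Certificate>
      </ds:X509Data></ds:KeyInfo>
    </md:KeyDescriptor>
    <md:AssertionConsumerService index="1"
        Binding="urn:oasis:names:tc:SAML:2.0:bindings:HTTP-POST"
        Location="https://sp.example.org/Shibboleth.sso/SAML2/POST"/>
  </md:SPSSODescriptor>
</md:EntityDescriptor>
"""


def count_encryption_keys(metadata_xml):
    """Count KeyDescriptor elements an IdP may use for encryption."""
    root = ET.fromstring(metadata_xml.strip())
    count = 0
    for key_descriptor in root.iter("{%s}KeyDescriptor" % MD):
        # No "use" attribute means the key is valid for both purposes.
        if key_descriptor.get("use") in (None, "encryption"):
            count += 1
    return count


if __name__ == "__main__":
    n = count_encryption_keys(SAMPLE_SP_METADATA)
    print("encryption keys:", n)
    if n > 1:
        print("warning: more than one encryption key in SP metadata")
```

With the old certificate marked signing-only, the sample reports a single encryption key. Removing that marking would yield two, which is the case the paragraph above warns about.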

Please share this information with your delegated administrators. By the way, if you’re not using delegated administration to manage SP metadata, please consider doing so since this puts certificate migration in the hands of individuals closest to the SP.

Social Identity: A Path Forward

Social Identity was a hot topic at Identity Week in San Francisco last November. Some of the participants questioned the wisdom of Social Identity in the first place: Do we really want to give the keys to the kingdom to Google? Wouldn’t it be in the Federation's best interest to continue to encourage campuses to deploy their own IdPs?

I think we can have our cake and eat it too if we properly limit the scope of a centralized Social Gateway for all InCommon participants.

First, what problem are we trying to solve? The primary motivation for a centralized Social Gateway is to attract Federation-wide SPs to join InCommon. Jim Basney says it best: Given the current penetration of InCommon within US higher education (approximately 20% of US HE institutions operate InCommon IdPs), a “catch-all” IdP is essential to provide a complete federation solution. A Social Gateway would go a long way towards filling this gap.

Second, what problems are we trying to avoid? Most importantly, we want campuses to continue their orderly transition to a federated environment, and so a centralized gateway should not be seen as an alternative to deploying a local IdP.

Not surprisingly, there is also a privacy concern. Like most other Social IdPs in this post-Snowden era, Google is seen by many as a privacy risk. To minimize this risk, we can (and should) limit the attributes that transit the gateway regardless of the attributes Google actually asserts. Moreover, note that a social gateway is inherently privacy-preserving in the sense that it masks (from Google) the end SPs the user visits.

With that background, consider the following proposed centralized Google Gateway for all InCommon participants:

- lightweight deployment

- reassert email and person name and that’s all; any other attributes asserted by Google are routinely dropped on the Gateway floor

- manufacture and assert ePPN at the Gateway

- no extra attributes, no trust elevation, no invitation service

Such a gateway cannot be used in lieu of a campus IdP. If a campus wants to go that route, presumably it can deploy a campus-based gateway on its own.
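To make the attribute handling concrete, here is a rough sketch of the filtering step. Everything in it is an assumption made for illustration: the gateway scope, the function name, the use of OpenID Connect style claim names on the Google side, and especially the way the ePPN is manufactured (a truncated hash of the Google subject identifier). The proposal above does not say how the ePPN would be derived.

```python
import hashlib

# Hypothetical scope for identifiers minted at the gateway.
GATEWAY_SCOPE = "gateway.example.org"

# Only email and person name transit the gateway (claim name -> attribute).
PASS_THROUGH = {"email": "mail", "name": "displayName"}


def gateway_attributes(google_claims):
    """Return the attributes the gateway would reassert to the end SP."""
    released = {}
    for claim, attribute in PASS_THROUGH.items():
        if claim in google_claims:
            released[attribute] = google_claims[claim]
    # Assumption: manufacture the ePPN from a hash of Google's subject
    # identifier so that the same user always gets the same value.
    digest = hashlib.sha256(google_claims["sub"].encode("utf-8")).hexdigest()
    released["eduPersonPrincipalName"] = "%s@%s" % (digest[:16], GATEWAY_SCOPE)
    return released


if __name__ == "__main__":
    claims = {
        "sub": "1234567890",
        "email": "user@example.com",
        "name": "Pat Example",
        "picture": "https://example.com/photo.png",  # dropped on the floor
        "locale": "en",                              # dropped on the floor
    }
    print(gateway_attributes(claims))
```

An allowlist is used rather than a blocklist so that any attribute Google starts asserting in the future is dropped by default.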

It is well known that a service provider can request attributes by reference using the SAML AuthnRequest Protocol. Usually the reference is to an <md:AttributeConsumingService> element in metadata, but as discussed in a recent blog post on data minimization, metadata is not required. The identity provider may map the reference to an attribute bundle in whatever way makes sense.

Below we describe a non-use case for the <md:AttributeConsumingService> element in the InCommon Federation. In this case, the service provider doesn't request attributes at all. Instead, the identity provider releases a predefined bundle of attributes to all service providers in a class of service providers called an Entity Category.

There is a class of service providers called the Research and Scholarship Category of service providers (SPs). Associated with the Research and Scholarship (R&S) Category is an attribute bundle B. An identity provider (IdP) supports the R&S Category if it automatically releases attribute bundle B to every SP in the R&S Category.

Every SP in the R&S Category is required to have an <md:AttributeConsumingService> element in its metadata. Now suppose an SP encodes attribute bundle A in metadata such that A is a subset of B. In other words, the SP requests fewer attributes than what the IdP has agreed to release. In this case, an IdP can choose to release A and still support the R&S Category.

Although the software supports this behavior, few (if any) IdPs are configured this way. Instead, IdPs release B to all SPs regardless of the attributes called out in SP metadata. Why? Well, it's easier for the IdP to release B across the board, but there's another, more important reason that depends on the nature of the attribute bundle, so let me list the attributes in the bundle along with some sample values:

eduPersonPrincipalName:trscavo@internet2.edu

mail:trscavo@internet2.edu

displayName:Tom Scavo OR (givenName:Tom AND sn:Scavo)

Note that all of the attributes in this bundle are name-based attributes. If you're going to release one, you may as well release all of them since each attribute value encodes essentially the same information. This is why IdPs choose to release the entire bundle across the board: there are no privacy benefits in releasing a strict subset, and so it's simply easier to release all attributes to all R&S SPs.
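The two release behaviors (release all of B, or release only the requested subset A) can be sketched in a few lines. This is an illustration of the logic only, not how any IdP implementation expresses its attribute filter policy. The function and variable names are hypothetical, and the entity category URI is the one I understand InCommon to use for R&S, so treat it as an assumption.

```python
# Assumed entity attribute value for the R&S Category.
RS_CATEGORY = "http://id.incommon.org/category/research-and-scholarship"

# Attribute bundle B as listed above.
BUNDLE_B = ("eduPersonPrincipalName", "mail", "displayName", "givenName", "sn")


def release(user_attributes, sp_categories, requested=None):
    """Decide which attributes to release to an SP.

    requested is the optional set of attribute names the SP calls out in
    its metadata (bundle A). When it is None, all of B is released.
    """
    if RS_CATEGORY not in sp_categories:
        return {}
    names = BUNDLE_B
    if requested is not None:
        # Never release anything outside B, whatever the SP asks for.
        names = [n for n in BUNDLE_B if n in requested]
    return {n: user_attributes[n] for n in names if n in user_attributes}


if __name__ == "__main__":
    user = {
        "eduPersonPrincipalName": "trscavo@internet2.edu",
        "mail": "trscavo@internet2.edu",
        "displayName": "Tom Scavo",
        "eduPersonAffiliation": "staff",  # not in B, never released here
    }
    print(release(user, {RS_CATEGORY}))
    print(release(user, {RS_CATEGORY}, requested={"mail"}))
```

Note that the requested set can only narrow the release. That is the difference between this policy and the liberal "release whatever the SP asks for" policy.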

I'm sure one could come up with a hypothetical use case for which there are real privacy benefits in releasing subset A of bundle B but we haven't bumped into such a use case yet. In any event, note the following:

- AFAIK, no software implementation supports more than one <md:AttributeConsumingService> element in metadata so there isn't much point in calling out the index of such an element in the <samlp:AuthnRequest>.

- Use of the AttributeConsumingServiceIndex XML attribute as described in the blog post is interesting, but entity attributes give the same effect, and moreover, entity attributes are in widespread use today (at least in higher ed).

- I doubt any IdP in the InCommon Federation would be inclined to implement a liberal attribute release policy such as "release whatever attributes are called out in the <md:AttributeConsumingService> element in metadata" since this is a potentially serious privacy leak.

This leads to the following prediction: the <md:AttributeConsumingService> element in metadata and the AttributeConsumingServiceIndex XML attribute in the <samlp:AuthnRequest> will turn out to be historical curiosities in the SAML protocol. At this point, the best approach to attribute release appears to be the Entity Category (of which the R&S Category is an example).

ForceAuthn or Not, That is the Question

Depending on your point of view, SAML is either a very complicated security protocol or a remarkably expressive security language. Therein lies a problem. A precise interpretation of even a single XML attribute is often elusive. Even the experts will disagree (at length) about the finer points of the SAML protocol. I’ve watched this play out many times before.

In this particular case, I’m referring to the ForceAuthn XML attribute, a simple boolean attribute on the <samlp:AuthnRequest> element. On the surface, its interpretation is quite simple: If ForceAuthn="true", the spec mandates that “the identity provider MUST authenticate the presenter directly rather than rely on a previous security context.” (See section 3.4.1 of the SAML2 Core spec.) Seems simple enough, but a Google search will quickly show there are wildly differing opinions on the matter. (See this recent discussion thread re ForceAuthn on the Shibboleth Users mailing list, for example.) I’ve even heard through the grapevine that some enterprise IdPs simply won’t do it. By policy, they always return a SAML error instead (which is the IdP’s prerogative, I suppose).

For enterprise SPs, such a policy is one thing, but if that policy extends outside the enterprise firewall...well, that’s really too bad since it reduces the functionality of the SAML protocol unnecessarily. OTOH, an SP that always sets ForceAuthn="true" is certainly missing the point, right? There must be some middle ground.

Here’s a use case for ForceAuthn. Suppose the SP tracks the user's location via IP address. When an anonymous user hits the SP, before the SP redirects the browser to the IdP, the IP address of the browser client is recorded in persistent storage and a secure cookie is updated on the browser. Using the IP address, the SP can approximate the geolocation of the user (via some REST-based API perhaps). Comparing the user's current location to their previous location, the SP can make an informed decision whether or not the user should be explicitly challenged for their password. If so, the SP sends a <samlp:AuthnRequest> to the IdP with ForceAuthn="true".
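The decision described in this use case might look roughly like the sketch below. It is illustrative only: the function names, URLs, and the representation of a location as a (city, country) tuple are assumptions, the geolocation lookup itself is left out, and a real SP would let its SAML software build, sign, and encode the request. Treating a first visit (no previous location) as no change is also a policy choice made here, not something the use case prescribes.

```python
import uuid
import xml.etree.ElementTree as ET
from datetime import datetime, timezone

SAMLP = "urn:oasis:names:tc:SAML:2.0:protocol"
SAML = "urn:oasis:names:tc:SAML:2.0:assertion"


def location_changed(previous, current):
    """Compare the stored location with the current one."""
    # With no previous location there is nothing to compare against.
    return previous is not None and previous != current


def build_authn_request(sp_entity_id, acs_url, idp_sso_url, force_authn):
    """Build a bare <samlp:AuthnRequest>, optionally with ForceAuthn."""
    ET.register_namespace("samlp", SAMLP)
    ET.register_namespace("saml", SAML)
    request = ET.Element("{%s}AuthnRequest" % SAMLP, {
        "ID": "_" + uuid.uuid4().hex,
        "Version": "2.0",
        "IssueInstant": datetime.now(timezone.utc).strftime("%Y-%m-%dT%H:%M:%SZ"),
        "Destination": idp_sso_url,
        "AssertionConsumerServiceURL": acs_url,
    })
    if force_authn:
        request.set("ForceAuthn", "true")
    issuer = ET.SubElement(request, "{%s}Issuer" % SAML)
    issuer.text = sp_entity_id
    return ET.tostring(request, encoding="unicode")


if __name__ == "__main__":
    previous = ("Ann Arbor", "US")      # read from persistent storage
    current = ("San Francisco", "US")   # derived from the client IP address
    print(build_authn_request(
        "https://sp.example.org/shibboleth",
        "https://sp.example.org/Shibboleth.sso/SAML2/POST",
        "https://idp.example.edu/idp/profile/SAML2/Redirect/SSO",
        force_authn=location_changed(previous, current),
    ))
```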

Behaviorally, this leads to a user experience like the one I had recently when I traveled from Ann Arbor to San Francisco for Identity Week. When I arrived in San Francisco, Google prompted me for my password, something it rarely does (since I’m an infrequent traveler). Apparently, this risk-based authentication factor protects against someone stealing my laptop, defeating my screen saver, and accessing my gmail. This is clearly a Good Thing. Thank you, Google!

This use case avoids the ForceAuthn problem described above, that is, the SP now has some defensible basis for setting ForceAuthn="true" and the debate between SPs and IdPs goes away. Simultaneously, we raise the level of assurance associated with the SAML exchange and optimize the user experience. It's a win-win situation.

The downside of course is that this risk-based authentication factor is costly to implement and deploy, especially at scale. Moreover, if each SP in the Federation tracks geolocation on its own, the user experience is decidedly less than optimal. But wait…distributed computing to the rescue! Instead of deploying the risk-based factor directly at the SP, we can deploy a centralized service that passively performs the geolocation check described above. There are multiple ways to deploy such a centralized service: 1) deploy the service as an ordinary SAML IdP; 2) deploy the service in conjunction with IdP discovery; or 3) invent a completely new protocol for this purpose. There are probably other ways to do this as well.

Hey, SP owners out there! If such a service existed, would you use it?

On December 18th, InCommon Operations will deploy three new metadata aggregates on a new vhost (md.incommon.org). All SAML deployments will be asked to migrate to one of the new metadata aggregates as soon as possible but no later than March 29, 2014. In the future, all new metadata services will be deployed on md.incommon.org. Legacy vhost wayf.incommonfederation.org will be phased out.

An important driver for switching to a new metadata server is the desire to migrate to SHA-2 throughout the InCommon Federation. The end goal is for all metadata processes to be able to verify an XML signature that uses a SHA-2 digest algorithm by June 30, 2014. For details about any aspect of this effort, see the Phase 1 Implementation Plan of the Metadata Distribution Working Group.

Each SAML deployment in the Federation will choose exactly one of the new metadata aggregates. If your metadata process is not SHA-2 compatible, you will migrate to the fallback metadata aggregate. Otherwise you will migrate to the production metadata aggregate or the preview metadata aggregate, depending on your deployment. You can find more information about metadata aggregates on the wiki.
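One way to see what a given aggregate demands of your metadata process is to read the algorithm identifiers out of its XML signature. The sketch below does only that; it does not verify the signature, and the sample document and function names are hypothetical. The algorithm URIs are the standard XML Signature identifiers for RSA-SHA256 and SHA-256.

```python
import xml.etree.ElementTree as ET

DS = "http://www.w3.org/2000/09/xmldsig#"

SHA2_ALGORITHMS = {
    "http://www.w3.org/2001/04/xmldsig-more#rsa-sha256",  # signature method
    "http://www.w3.org/2001/04/xmlenc#sha256",            # digest method
}

# Hypothetical, heavily abbreviated signed aggregate (values elided).
SAMPLE_AGGREGATE = """
<md:EntitiesDescriptor xmlns:md="urn:oasis:names:tc:SAML:2.0:metadata"
                       xmlns:ds="http://www.w3.org/2000/09/xmldsig#">
  <ds:Signature>
    <ds:SignedInfo>
      <ds:CanonicalizationMethod
          Algorithm="http://www.w3.org/2001/10/xml-exc-c14n#"/>
      <ds:SignatureMethod
          Algorithm="http://www.w3.org/2001/04/xmldsig-more#rsa-sha256"/>
      <ds:Reference URI="">
        <ds:DigestMethod Algorithm="http://www.w3.org/2001/04/xmlenc#sha256"/>
        <ds:DigestValue>PLACEHOLDER</ds:DigestValue>
      </ds:Reference>
    </ds:SignedInfo>
    <ds:SignatureValue>PLACEHOLDER</ds:SignatureValue>
  </ds:Signature>
</md:EntitiesDescriptor>
"""


def signature_algorithms(metadata_xml):
    """List the signature and digest algorithm URIs in the document."""
    root = ET.fromstring(metadata_xml.strip())
    found = []
    for tag in ("SignatureMethod", "DigestMethod"):
        for element in root.iter("{%s}%s" % (DS, tag)):
            found.append(element.get("Algorithm"))
    return found


def requires_sha2(metadata_xml):
    """True if verifying this document needs SHA-2 support."""
    return any(a in SHA2_ALGORITHMS for a in signature_algorithms(metadata_xml))


if __name__ == "__main__":
    print(signature_algorithms(SAMPLE_AGGREGATE))
    print("requires SHA-2:", requires_sha2(SAMPLE_AGGREGATE))
```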

Questions? Subscribe to our new mailing list and/or check out our FAQ:

Help: help@incommon.org

FAQ: https://spaces.at.internet2.edu/x/yoCkAg

To subscribe to the mailing list, send email to sympa@incommon.org with this in the subject: subscribe metadata-support

An Overview of the Duo Security Multifactor Authentication Solution

Duo Security supports three classes of authentication methods:

- Duo Push

- Standard OATH Time-based One-Time Passcodes (TOTP)

- Telephony-based methods:

  - OTP via SMS

  - OTP via voice recording

  - Voice authentication

The three classes of methods have different usability, security, privacy, and deployability characteristics.

Usability

Duo Push is highly usable. When people think of Duo, they usually think of Duo Push since there are numerous video demos of Duo Push in action. (Most authentication demos are boring, as you know, but Duo Push is an exception.)

Duo voice authentication is also highly usable. It is certainly less flashy (and it has other disadvantages as well) but its usability is more or less the same as Duo Push.

Standard OATH TOTP built into the Duo Mobile native mobile app is less usable. Transcribing a six-digit passcode will get old quickly if the user is asked to do it too often.

The telephony-based OTP methods are least usable. They are best used as backup methods or in conjunction with some other exceptional event.

Security

Duo Push and OATH TOTP are implemented in the Duo Mobile native app. As such, Duo Mobile is a soft token (in contrast to hard tokens such as YubiKey). In general, soft tokens are less secure than hard tokens. (The Duo Mobile native app is not open source and so it’s unknown whether a 3rd-party security audit has been conducted on the software base.)

Duo Push uses asymmetric crypto. The private key is held by the Duo Mobile app. The app also manages a symmetric key used for OATH TOTP. A copy of the symmetric key is also held by the Duo cloud service. As the RSA breach showed, the symmetric keystore on the server is a weakness of OATH TOTP. Indeed, this is one reason why Duo Push was invented.

The above facts give Duo Push a security edge over OATH TOTP. In practice, however, both are implemented in Duo Mobile, and the security of the Duo Mobile soft token depends intimately on the underlying operating system (iOS or Android, mainly), so the two authentication methods are considered to be more or less equivalent.

The telephony-based methods are less secure, however. Telephony introduces yet another untrusted 3rd party. In fact, telco infrastructure is considered by many to be hostile infrastructure that is best avoided except perhaps in exceptional situations such as backup scenarios.

Privacy

We consider the following aspects of the privacy question:

- The privacy associated with the user’s phone number

- The privacy characteristics of the Duo Mobile app

- The privacy characteristics of the mobile credential

It should be immediately obvious that the use of any telephony-based method requires the user to surrender one or more phone numbers to Duo. Whether or not that is acceptable depends on the environment of use.

It is worth noting that Duo Mobile itself does not require a mobile phone number. Indeed, Duo Mobile runs on any compatible mobile device, not just smartphones. For example, Duo Mobile runs on iPhones, iPads, and iPod Touch under iOS. Telephony is not strictly required.

It is not known exactly what phone data is collected by the Duo Mobile app. Although the Duo privacy statement appears reasonable to this author, YMMV.

To use the Duo cloud service, one or more mobile credentials are required. For the telephony-based methods, there is a binding between the user’s phone number and the Duo service, as already discussed.

To use Duo Mobile with the Duo service, a binding between some user identifier and the Duo service is required. Any simple string-based identifier will satisfy this requirement. The identifier may be opaque but that would render the Duo Admin web interface significantly less useful. Unless you intend to use the Duo Admin API, a friendly identifier such as email address or eduPersonPrincipalName is recommended, but even this can be avoided, as noted below.

If you prefer, you can persist the opaque Duo identifier created at the time of enrollment. This precludes the need to pass a local identifier to the Duo service; just pass the Duo identifier instead.

Deployability

Generally speaking, Duo has flexible deployment options. How you deploy Duo depends on your particular usability, security, and privacy requirements (see above).

Authentication

Duo technology is designed to be deployed at the service. For example, Duo supports VPNs (multiple vendors), off-the-shelf web apps (WordPress, Drupal, Confluence, etc.), custom web apps (via an SDK), Unix, and ssh.

Here are some integration tips:

- To integrate with a custom web app, use the Duo Web SDK to obtain a mix of authentication options including Duo Push, voice authentication, and one-time passcodes via Duo Mobile. The Duo Web interface also accepts one-time passcodes previously obtained by some out-of-band method, and in fact, the web interface itself allows the user to obtain a set of emergency passcodes on demand.

- If telephony-based methods are not an option, for either security or privacy reasons, integrate Duo into your web app using the low-level Duo Auth API.

- If you want to add a second factor to a non-web app, say, an email-based password reset function, consider using the Duo Verify API.

Out of the box, Duo integrates with enterprise SSO systems based on Shibboleth or Microsoft AD FS. Integration with other SSO systems is possible using the SDK or any of the APIs. For example, the Duo Shibboleth Login Handler uses the Web SDK.

Enrollment

Like authentication, how you enroll your users depends on your requirements:

- Since the Duo Web SDK incorporates telephony-based methods, the enrollment process must bind the user’s phone number with the Duo service. This is usually done by sending a link to the user’s mobile device via SMS.

- To avoid telephony-based methods, the user scans a QR code with the Duo Mobile app after authenticating with a username and password. While this has advantages, it requires the use of the low-level Auth API and is limited to mobile devices that support the native app (iOS, Android, etc.).

It is assumed that most of your users will enroll automatically via one of the methods mentioned above, but manual enrollment is possible. The Duo Admin web interface is provided for this purpose. The Duo Admin API is also provided so that admin functions can be accessed outside of the web interface.

Overheard yesterday:

If I can't use Duo Push, then I may as well use Google Authenticator.

Duo Mobile is a native mobile app. Duo Push is a capability of Duo Mobile on certain devices (iOS, Android, etc.). Duo Mobile protects two secrets: a symmetric key and a 2048-bit public/private key pair. The latter is used to secure the active, online, Duo Push authentication protocol.

In addition to Duo Push, the Duo Mobile app supports standard OATH TOTP, which requires a symmetric key. One copy of the symmetric key is protected by the Duo Mobile app while another copy is protected by the Duo cloud service. The two work together to provide a standard, offline, one-time password authentication protocol.
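Because the app and the server hold the same symmetric key, each can compute the passcode independently from the key and the current time. The sketch below follows the published OATH algorithms (HOTP in RFC 4226, TOTP in RFC 6238) using only the Python standard library. It is not Duo's code, and the function names are my own. The key shown is the ASCII test key from the RFCs, not a real secret.

```python
import hashlib
import hmac
import struct
import time


def hotp(key, counter, digits=6):
    """HMAC-based one-time passcode (RFC 4226) using HMAC-SHA-1."""
    mac = hmac.new(key, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low nibble of the last byte picks an offset.
    offset = mac[-1] & 0x0F
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(key, now=None, step=30, digits=6):
    """Time-based one-time passcode (RFC 6238): HOTP over a time counter."""
    if now is None:
        now = time.time()
    return hotp(key, int(now) // step, digits)


if __name__ == "__main__":
    shared_key = b"12345678901234567890"  # RFC test key, for illustration
    # Both sides compute the same value for the same 30-second window.
    print("passcode now:", totp(shared_key))
    print("RFC 6238 test vector at t=59:", totp(shared_key, now=59, digits=8))
```

The protocol is offline in the sense that the device never contacts the server. The price is that the server must store a copy of the key, which is the weakness noted earlier.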

Google Authenticator supports OATH TOTP as well but the similarity with Duo Mobile ends there. Google offers no service analogous to the Duo cloud service, nor is there a server-side app you can run on-premise (but check out an open-source OATH TOTP server developed by Chris Hyzer at Penn). Google Authenticator does come with a PAM module but it is not enterprise grade by any means.

Bottom line: If you want to install an app on your mobile device that supports OATH TOTP, choose Duo Mobile. It's free, it works with all OATH TOTP-compatible servers (Google, Dropbox, AWS, etc.), it is well supported, and it does so much more (such as Duo Push, if and when you need it).

Multifactor Authentication in the Extended Enterprise

There are known pockets of multifactor in use in higher ed today but more often than not multifactor is deployed at the service itself. This is entirely natural since the SP has a vested interest in its own security. This tendency is often reflected in the technology solutions themselves, which are primarily enterprise authentication technology solutions at best.

Given a particular authentication technology, the decision whether or not to deploy is a complicated one. Two specific questions tend to select the most flexible solutions:

- Does the authentication technology solution integrate with the enterprise SSO system?

- Can the authentication technology solution be delivered “as-a-service?”

Let’s assume the answer to the first question is yes (an assumption on which the rest of this article is based). If the answer to the second question is no, we can conclude that the technology is limited to the enterprise, which means that one or the other of the SSO system or the authentication technology solution is limited to the enterprise. Regardless of the answers, this pair of questions tends to clarify the scope of the deployment so that there are no surprises down the road.

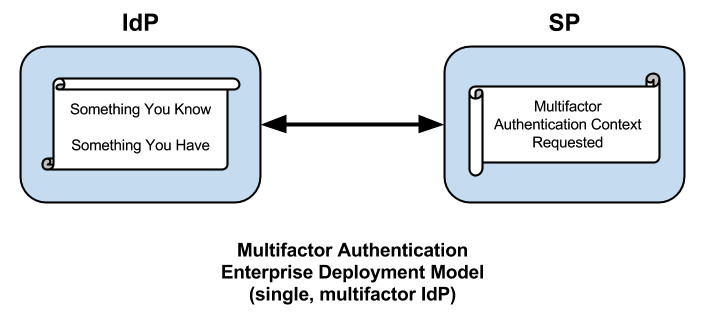

Enterprise Multifactor Authentication

In a federated scenario, the typical approach to multifactor authentication is to enable one or more additional factors in an existing enterprise IdP deployment that already authenticates its users with a username and password. This, too, is a natural thing to do, and it does have some advantages (to be discussed later) but this approach has at least one major drawback and, that is, it assumes all your users are managed by a single IdP. That’s a showstopper for any use case that touches the extended enterprise.

Patrick Harding refers to the notion of the extended enterprise in a recent article entitled Vanishing IT Security Boundaries Reappearing Disguised As Identity. Although the article itself focuses on identity technology, it’s the title that’s relevant here, which suggests that the limitations of the traditional security perimeter, typified by the firewall, give rise to the twin notions of identity and the extended enterprise. Neither is a new concept, but together they highlight the limitations of what might be called the enterprise deployment model of multifactor authentication.

Integrating multifactor into the enterprise IdP is a definite improvement but it's still not good enough. How many times do you authenticate against your IdP in a typical work day? That's how many times you'll perform multifactor authentication, so your multifactor solution had better have darn good usability.

The fact is, unless your multifactor solution is passive, it will be less usable. The tendency will be to use the IdP's browser session to short-circuit an authentication request for multifactor. This is easier said than done, however. Session management at the IdP was built with single factor authentication in mind. It'll be some time before SSO regains its footing at the IdP.

In the sections that follow, we show how to extend the enterprise deployment model in a couple of interesting and useful directions.

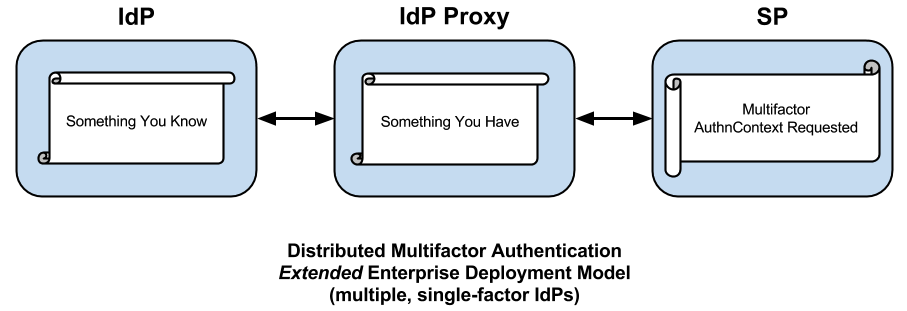

Distributed Multifactor Authentication

The core SAML spec alludes to a role called an IdP Proxy, apparently referring to a kind of two-faced IdP embedded in a chain of IdPs, each passing an assertion back to the end SP, perhaps consuming and re-issuing assertion content along the way. Since the SAML spec first appeared in 2005, the IdP Proxy has proven itself in production hub-and-spoke federations worldwide (particularly in the EU), but the idea of an IdP Proxy coupled with a multifactor authentication solution has only recently appeared. In particular, SURFnet began a project to deploy a Multifactor IdP Proxy in the SURFconext federation late in 2012. The Multifactor IdP Proxy enables what we call distributed multifactor authentication.

Consider the following use case. Suppose a high-value SP will only accept assertions from IdPs willing and able to assert a multifactor authentication context. Users, and therefore IdPs, are scattered all across the extended enterprise. Even if all the IdPs belong to the same federation, the trust problem becomes intractable beyond a mere handful of IdPs. A Multifactor IdP Proxy may be used to ease the transition to multifactor authentication across the relevant set of IdPs.

The Multifactor IdP Proxy performs two basic tasks: 1) it authenticates all browser users via some “what you have” second factor, and 2) it asserts a multifactor authentication context (plus whatever attributes are received from the end IdP). The Multifactor IdP Proxy we have in mind is extremely lightweight—it asserts no user attributes of its own and is therefore 100% stateless.

More importantly, the Multifactor IdP Proxy precludes the need for each IdP to support an MFA context. Instead each IdP can continue doing what it has been doing (assuming the existence of a sufficiently strong password in the first place). For its part, the SP need only trust one IdP partner to perform MFA, that is, the Multifactor IdP Proxy. If necessary, the SP can deploy the Multifactor IdP Proxy itself. In either case, the SP meets its high level of assurance requirements in relatively short order.
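The proxy's two tasks can be sketched as a single stateless function. This is a toy model in which assertions are plain dictionaries; a real proxy consumes and issues signed SAML assertions. The entity ID, the authentication context URI, and the function name are all hypothetical.

```python
# Hypothetical identifiers for the proxy and its MFA authentication context.
PROXY_ENTITY_ID = "https://mfa-proxy.example.org/idp"
MFA_CONTEXT = "https://mfa-proxy.example.org/authn-context/mfa"


def proxy_assertion(upstream_assertion, second_factor_verified):
    """Re-issue the end IdP's assertion with an MFA authentication context.

    The proxy adds no attributes of its own and keeps no per-user state:
    everything it asserts comes from the upstream assertion plus the
    outcome of the second-factor check.
    """
    if not second_factor_verified:
        raise PermissionError("second factor not verified")
    return {
        "issuer": PROXY_ENTITY_ID,
        "subject": upstream_assertion["subject"],
        "attributes": dict(upstream_assertion["attributes"]),
        "authn_context": MFA_CONTEXT,
    }


if __name__ == "__main__":
    upstream = {
        "issuer": "https://idp.example.edu/idp/shibboleth",
        "subject": "user@example.edu",
        "attributes": {"mail": "user@example.edu"},
        "authn_context":
            "urn:oasis:names:tc:SAML:2.0:ac:classes:PasswordProtectedTransport",
    }
    print(proxy_assertion(upstream, second_factor_verified=True))
```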

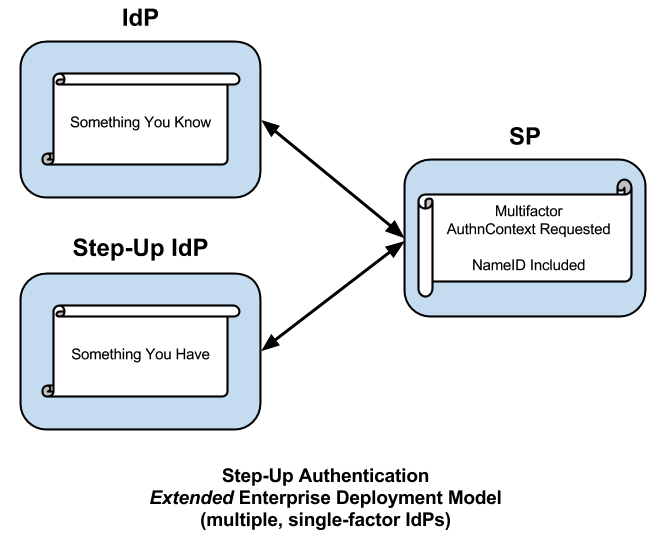

Step-Up Authentication

Consider the following general use case. A federated SP not knowing its users in advance accepts identity assertions from some set of IdPs. The SP trusts the assertion upon first use but—the network being the hostile place that it is—the SP wants assurances that the next time it sees an assertion of this identity, the user is in fact the same user that visited the site previously. The SP could work with its IdP partners to build such a trusted federated environment (or a federation could facilitate that trust on the SP’s behalf), but frankly that’s a lot of work, and moreover, all of the IdPs must authenticate their users with multiple factors before such a trusted environment may be realized. Consequently the SP takes the following action on its own behalf.

Instead of leveraging an IdP Proxy, or perhaps in addition to one, the SP leverages a distributed IdP for the purposes of step-up authentication. When a user arrives at the site with an identity assertion in hand, the SP at its discretion requires the user to authenticate again. This might happen immediately, as the user enters the front door of the site, or it might happen later, when the user performs an action that requires a higher level of assurance. The timing doesn’t matter. What matters is that the SP adds one or more additional factors to the user’s original authentication context, thereby elevating the trust surrounding the immediate transaction.
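From the SP's side, the step-up decision reduces to comparing the factors already attached to the session with what the requested action demands. The sketch below is one hypothetical way to model that; the class, the function names, and the factor labels are assumptions, and the redirect to the step-up IdP is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Session:
    """SP-side session created from the user's original identity assertion."""
    subject: str
    factors: set = field(default_factory=lambda: {"password"})


def needs_step_up(session, required_factor_count):
    """True if the action demands more factors than the session has."""
    return len(session.factors) < required_factor_count


def record_step_up(session, factor):
    """Add the factor verified by the step-up IdP to the session."""
    session.factors.add(factor)


if __name__ == "__main__":
    session = Session(subject="user@example.edu")
    # Browsing needs one factor; a sensitive action needs two.
    print("browse:", needs_step_up(session, 1))
    print("sensitive action:", needs_step_up(session, 2))
    record_step_up(session, "totp")  # after a round trip to the step-up IdP
    print("sensitive action after step-up:", needs_step_up(session, 2))
```

Because the check runs per action, the same code covers both timings described above: at the front door, or later when a higher level of assurance is needed.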

Comparative Analysis

Each of the methods described above has at least one disadvantage:

- Enterprise MFA is relatively difficult to implement. In general, the login handlers associated with multifactor IdPs must implement complex logic. SSO will suffer unless session management at the IdP is up to the task.

- Login interfaces for multifactor IdPs tend to be more complicated (but done right, this can turn into an advantage).

- Some user attributes cross the IdP Proxy more easily than others. In particular, scoped attributes present significant issues when asserted across the IdP Proxy.

- The IdP Proxy represents a single point of failure and a single point of compromise. Compensating actions at the IdP Proxy may be required.

- Since the SP must assert user identity to the step-up IdP, a non-standard authentication request may be required. Moreover, depending on the authentication technology solution in use, the SP may have to sign the authentication request.

On the other hand, the extended enterprise deployment models have some distinct advantages:

- Since distributed MFA and step-up authentication incorporate single-factor IdPs, these solutions (compared to enterprise MFA IdPs) are significantly easier to implement and deploy.

- For both distributed MFA and step-up authentication, the trust requirements of IdPs throughout the extended enterprise are minimized. Indeed, an IdP can continue to do what it already does since higher levels of assurance at the IdP are not required (but can be utilized if present).

- If necessary, the SP can assume at least partial ownership of its own trust requirements. The deployment strength of the step-up IdP can be completely determined by the SP. No external multifactor dependencies need to exist.

- By separating the factors across multiple IdPs, flexible distributed computing options are possible. In particular, Multifactor Authentication as-a-Service (MFAaaS) becomes an option.

The latter pair of advantages may be significant for some use cases. Unlike the enterprise deployment model (which relies on a single IdP), the extended enterprise surrounding the SP may leverage multiple IdPs. Moreover, those IdPs can live in different security domains, which is what makes MFAaaS possible.

In an intriguing blog post, Anil John laments the “sad state of affairs” where we find that public sector entities across the finance, government, and healthcare sectors “have not done an equivalent job of vouching for information they create and manage in the electronic world as they do in the physical world.” As a result, commercial sector entities, in particular the social media providers, are filling the gap by providing online services that invariably monetize user data at the expense of user privacy.

To expand on this theme, the Education Sector is perhaps another public sector “cylinder of excellence,” and yes, Education might “step up” to the challenge as well. Indeed, it would be in Education’s best interest to step out of its silo and assume the role of “a validation authority or an attribute provider,” which John rightly observes is generally lacking in the cybersphere.

For example, educational institutions issue transcripts, certificates, and diplomas in the physical world but I know of no instance where this is done effectively in the digital world. A digital representation of a diploma, say, could be signed with the institution’s private signing key and distributed to graduates for little or no cost. Upon the graduate’s approval, that same digitally signed diploma could be distributed to other interested parties (e.g., prospective employers) for a small fee, thereby recouping the investment the institution must ultimately make to achieve a certified level of assurance for the identity processes underlying the use of its signing key.

Of course, if this were easy, it would already have been done. I will note, however, that hundreds of educational institutions already have private signing keys, which today are used almost exclusively to issue SAML bearer tokens for federated SSO transactions. The corresponding public keys are distributed via a trusted SAML metadata aggregate signed by InCommon, well known to be an identity federation for research and education in the US. Finally, an assurance framework whereby issuers attain the required level of assurance already exists in the Education Sector as well. Taken together, these observations suggest that a significant portion of the work has already been done.

Along these lines, some interesting and relevant projects are already underway. One such example, a forward-looking project called I Reveal My Attributes (IRMA), aims to put digitally signed attribute assertions on smart cards so that students and other users can prove statements about themselves in a privacy-preserving manner, statements that today are merely self-asserted. For more information, see SURFnet’s recorded session at the 2013 Internet2 Annual Meeting. (The IRMA presentation, which includes a demo of the smart card in action, starts at 23:00 minutes into the session recording.)

Two-Factor Authentication Is Not The End Game

Of late, Twitter has taken a lot of heat in cyberspace for its hasty rollout of two-factor authentication (2FA). Part of this criticism is justified but some of it is simply due to the fact that 2FA is coming of age. Deployers, observers, and pundits are finally coming to grips with the strengths and the weaknesses of typical 2FA deployments.

Criticisms leveled at Twitter in the last couple of weeks include:

- Usability: SMS passcodes are not particularly usable

- Multiuser Accounts: still no support for multiuser Twitter accounts

- Mobile Apps: Twitter native apps on “smart” mobile devices will break

- Mobile Malware: smartphone-based malware can be used to steal SMS passcodes

- Telephony: attackers can subvert the SIM card or the phone itself

- Account Recovery: it’s too easy for a Twitter user to permanently lose control of their account

Let’s look at each of these criticisms one at a time.

Usability

If every login event required a one-time passcode, 2FA would get old very, very quickly. Many 2FA solutions work around this annoyance by "remembering" a genuine 2FA event in some fashion, often using cookies, which allows the second factor to be bypassed subject to policy (which may be hard or soft depending on the flexibility of the solution). AFAIK all one-time password schemes have this problem. This includes the lion’s share of what’s out there today since the OATH standard, which is widely deployed (Google, Dropbox, AWS, etc.), requires an identical user operation. So this criticism is not Twitter-specific; it applies to most 2FA schemes in existence today.
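For readers who haven't looked under the hood, the following is a minimal sketch of how an OATH one-time passcode is computed, following the HOTP (RFC 4226) and TOTP (RFC 6238) algorithms. It is meant only to show why every such scheme asks the user to do the same thing (read a short code and type it in); it is not a description of any particular vendor's deployment, and it omits everything a real verifier needs (secret storage, rate limiting, clock-drift windows).

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low nibble of the last byte
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Time-based variant (RFC 6238): the counter is the current time step."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step), digits)
```

With the RFC 4226 test secret `b"12345678901234567890"`, `hotp(secret, 0)` yields `755224`, the first value in that RFC's test vector table.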

Multiuser Accounts

Observers are starting to realize that multiple factors strongly discourage password sharing. (Some observers predicted this side effect of 2FA before Twitter made it a popular topic.) In some cases, 2FA is intentionally deployed to thwart password sharing, but in the case of Twitter, multiuser accounts are seen as a highly desirable feature, so precisely those accounts that require increased security (i.e., enterprise accounts) will tend not to enable the Twitter 2FA option. We’re already starting to see non-Twitter solutions (e.g., a browser plug-in that juggles passwords apart from Twitter) address this limitation of the Twitter service.

The real issue here is:

authentication != authorization

Twitter makes the fundamental mistake of equating authentication with authorization, and therein lies the problem. Until that’s fixed, any attempt to enable multiuser Twitter accounts will seem like a kludge.
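A toy sketch may help show what the separation looks like in practice. The names below (`DELEGATES`, `authenticate`, `may_post_as`) are hypothetical, and authentication is stubbed out entirely; the point is only that each person proves who they are individually, and a separate lookup decides which shared account they may act for.

```python
from typing import Optional

# Hypothetical data: which individual users may act for a shared account.
DELEGATES = {"@acme": {"alice", "bob"}}


def authenticate(user: str, verified: bool) -> Optional[str]:
    """Authentication: establish who the person is (stubbed for illustration)."""
    return user if verified else None


def may_post_as(user: Optional[str], account: str) -> bool:
    """Authorization: decide what an already-authenticated person may do."""
    return user is not None and user in DELEGATES.get(account, set())
```

In a model like this, nobody shares the account password, so each user can enable their own second factor without breaking access for colleagues.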

Btw, federation tends to promote (maybe "force" is a better word) good identity management, so if you want to separate authentication from authorization in your service offering (and you probably do), bite the bullet and federate your service. Besides, you don’t really want to issue passwords to your users, do you? (Twitter, are you listening?)

Mobile Apps

At a recent mobile dev workshop, a presenter gave a nice overview of mobile security but then went on and showed the devs in the audience how to use Basic Auth as a practical example. Interest in this topic ran high and the room was abuzz when the presenter open sourced his code right then and there.

Basic Auth is a big mistake. As is well known, 2FA breaks mobile apps secured with user passwords. To compensate, Google, Apple, and others have introduced "application specific passwords" (ASPs) as an alternative. ASPs are somewhat better than user passwords for securing mobile apps but current implementations are lacking. In particular, Twitter’s implementation of ASPs is severely limited, so beware.

OpenID Connect (based on OAuth2) may turn out to be a viable solution to this problem (but only time will tell). OIDC will drop an OAuth2 access token on a client application as a by-product of web SSO. Think of this as automated distribution of ASPs. We’ll see if OIDC catches on with mobile devs, however. Like I said, we’re not quite there yet.

But wait, ASPs don’t provide strong authentication in any case, so why bother? I can’t answer that. There’s talk of fingerprint readers, iWatch, and a finger ring from Google, but I think it’s fair to say that mobile device security is far from being a solved problem. There’s a lot of room for innovation in this space.

Mobile Malware

Is mobile malware a problem, or will it become one? Absolutely, but malware isn’t focused exclusively on SMS, so this criticism of Twitter’s deployment goes beyond 2FA via SMS. Security strategies that protect the whole device are needed, and the mobile device is poised to become the next battlefield, to be sure.

Telephony

An authentication scheme that incorporates telephony (SMS, voice calls, etc.) introduces yet another untrusted 3rd party into the security equation. Indeed, subverting the telco provider has proven to be relatively easy (and so far the telcos have had little incentive to fix the problem). OTOH, the telcos have an important role to play. After all, my telco provider strongly verified my identity when I created my account, so they are in a good position to be a trusted source of attributes. That said, it remains to be seen if telephony can provide strong authentication. I rather doubt it.

Account Recovery

In its most recent 5-year plan for strong authentication, Google characterized account recovery as the "Achilles' heel" of its 2FA deployment. Indeed, compared to what Twitter provides, Google’s approach to account recovery is stellar. This is one area where Twitter definitely needs to do a better job.

Conclusion

So we see that most of the criticisms leveled at Twitter are general shortcomings of typical 2FA deployments. Sure, Twitter can (and no doubt will) improve their deployment, but that shouldn’t be their end game in any case. As Bruce Schneier observed about 2FA over 8 years ago:

Two-factor authentication is a long-overdue solution to the problem of passwords. I welcome its increasing popularity, but identity theft and bank fraud are not results of password problems; they stem from poorly authenticated transactions. The sooner people realize that, the sooner they'll stop advocating stronger authentication measures and the sooner security will actually improve.

In other words, protect the tweet separately from the Twitter account. This is the only strategy that will prevent the Twitter breaches that we’ve witnessed in recent weeks.

Two Services Added to Research and Scholarship Category

Two services have been approved for the InCommon Research and Scholarship Category (R&S). R&S allows participating identity providers to release a minimal set of attributes to an entire group of services, rather than negotiating attribute release one-by-one. The new R&S services include:

- Collaboration Wiki Spaces at Internet2, a federated wiki for researchers and working groups collaborating on projects of interest to the Internet2 community

- Narada Metrics, a service offered by the Ohio Technology Consortium (OH-TECH), providing a method for multi-domain network operators and big data researchers to share network and system performance data

Service providers (SPs) eligible for the R&S category support research and scholarship services for the InCommon community. Participating identity providers (IdPs) agree to release a minimal set of attributes to R&S SPs (person name, email address, user identifier) after making a one-time configuration to the IdP’s default attribute release policy. This provides a simpler and more scalable approach to federation than negotiating attribute release individually with every service provider.
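The scaling argument can be illustrated with a small sketch. This is not an actual IdP configuration; the category label and attribute names below are placeholders chosen for illustration. The idea is that one category-wide rule replaces a rule per SP.

```python
# Assumed category label and attribute names, for illustration only.
RS_CATEGORY = "research-and-scholarship"
RS_BUNDLE = ("name", "email", "user_id")


def release_attributes(user: dict, sp_categories: set,
                       per_sp_rules: tuple = ()) -> dict:
    """Return the attributes an IdP would release to a given SP.

    per_sp_rules holds any attributes negotiated individually with the SP;
    membership in the R&S category adds the minimal bundle on top of those.
    """
    allowed = set(per_sp_rules)
    if RS_CATEGORY in sp_categories:
        allowed |= set(RS_BUNDLE)
    return {k: v for k, v in user.items() if k in allowed}
```

A newly approved R&S service receives the bundle with no further change at the IdP, while a service outside the category receives nothing unless a per-SP rule says otherwise.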

With the addition of these new services, there are now 10 R&S SPs. Also, 38 IdPs have indicated support for the R&S category. A complete list of R&S services and the IdPs that support R&S is maintained on the InCommon web site. See the InCommon wiki for more information about the R&S Category.

Bring Your Own Token - Part 2

By Tom Scavo, InCommon Operations Director

In Part I of this series we suggested that mobile-based two-factor authentication is a viable alternative to password-based authentication methods. Here in Part II, we discuss the well-known dangers of password reset and describe a mitigating strategy that leverages a privileged phone to securely manage access to the password reset webapp.

A secure password reset process will resist an attacker that possesses a lost or stolen mobile device. This argues strongly against email-based password reset since we have to assume the user’s password is compromised if the phone is lost (as discussed in Part I). The recent hacking of Mat Honan confirms (yet again) that security processes “that center around email instead of identity are hopelessly outdated and dangerously flawed.” [2] In particular, a password reset process that relies on email alone is a recipe for disaster.

In general, how do we protect against lost or stolen mobile devices? Our first line of defense (as suggested in Part I) is to passcode-protect all mobile devices. (Btw, when will fingerprint readers become commonplace on mobile devices?) To this end, we can and should consider an institutional bring-your-own-token (BYOT) program (also discussed in Part I), but since the success of such a program depends on a cooperative and diligent end user, we know that complete 100% coverage is impossible. What else can we do?

General Strategy

Everyone seems to be calling for the end of passwords these days. For example, Jeremy Grant, Senior Executive Advisor for Identity Management at NSTIC, has made it clear that passwords alone are not enough. Yet password technology stubbornly persists in cyberspace. What will it take to usurp the password as the dominant authentication technology? The reader is invited to study the work of Bonneau et al., which sheds light on this vexing problem.

Just to be clear, it’s not realistic to think we can actually get rid of passwords. We do, however, very much want to stop accepting claims based on password authentication alone, by layering a “what you have” factor on top of a typical “what you know” password. It’s pretty clear that the thing people have is a mobile device, so we start there. Could it be that mobile is poised to make a dent in this 20-year-old problem space?

Likewise we don’t want to get rid of email-based password reset but we can no longer allow a password reset based solely on the user’s control of an email account. Since mobile devices tend to have “always on” email services, this is becoming a critically obvious conclusion.

Isn’t that strange? Mobile is at once both our savior and our curse.

So what do we layer on top of ordinary email-based password reset to make it more secure? Well, let’s see what we can do with another phone. Note that I didn’t say “smartphone” or even “mobile phone,” but in any case, the phone involved in the password reset process is necessarily different than the phone used for everyday two-factor authentication.

Two-Factor Password Reset

The basic idea is to layer a second factor on top of the typical password reset process so as to protect against a lost or stolen mobile phone (i.e., the phone used for two-factor authentication) and thereby make password reset more resilient to attack. There’s more than one way to skin this cat, but let’s introduce a second phone and see where it leads. In fact, the best type of phone for this purpose is what I’ll call a non-mobile phone, that is, a phone that can’t be lost. Typically, this would be an office phone or a home phone. More on this point later.

Let’s review. A service provider requires two-factor authentication. The first factor is a simple password (that the user already knows) while the second factor is the user’s mobile phone (that the user already has). The user pre-enrolls a second phone, preferably a non-mobile phone. This backup phone is used for password reset only.

In the event the user loses or forgets their password, or loses their mobile phone, the following steps are taken:

- The user attempts to access the password reset webapp and is prompted to enter their email address.

- The system verifies the entered email address and then sends a custom link in an email message to the user.

- The user clicks the link and is prompted to enter their phone number.

- The system verifies that the entered phone number is correct and then sends a one-time PIN to the user’s backup phone in a recorded voice message.

- The user subsequently accesses the password reset webapp, presents the PIN, and is authorized to reset their password.

- The next time the user attempts to access the service provider, after they have successfully authenticated with their new password, the user will be required to enroll a mobile phone (for the purposes of two-factor authentication). This phone can have the same phone number as the previously lost phone or it can be a new phone altogether.

When all of the above steps are complete, the user will once again be able (and required) to authenticate to the service provider with two factors.

Note that the backup phone is critical to the successful completion of this sequence of steps. In fact, the service provider would be wise to test the backup phone from time to time, by sending a one-time PIN that the user subsequently presents as evidence that the backup is working.
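The sequence of steps above can be sketched as a small state machine. This is a sketch under stated assumptions, not a reference implementation: the class name, the state names, and the two delivery callables (`send_email`, `send_voice_pin`) are hypothetical stand-ins for whatever email and telephony services a deployer actually uses, and the final re-enrollment of a mobile phone is not modeled.

```python
import hmac
import secrets


class PasswordReset:
    """One reset attempt: an emailed link first, then a PIN to the backup phone."""

    def __init__(self, email, backup_phone, send_email, send_voice_pin):
        self.email = email
        self.backup_phone = backup_phone
        self._send_email = send_email          # hypothetical delivery callables
        self._send_voice_pin = send_voice_pin
        self._link_token = None
        self._pin = None
        self.state = "start"

    def request(self, email: str) -> bool:
        """Verify the email address, then mail a custom link."""
        if self.state != "start" or email != self.email:
            return False
        self._link_token = secrets.token_urlsafe(32)
        self._send_email(self.email, self._link_token)
        self.state = "link_sent"
        return True

    def follow_link(self, token: str, phone: str) -> bool:
        """Verify the link and phone number, then send a one-time PIN by voice."""
        if self.state != "link_sent":
            return False
        if not hmac.compare_digest(token.encode(), self._link_token.encode()):
            return False
        if phone != self.backup_phone:
            return False
        self._pin = f"{secrets.randbelow(10 ** 6):06d}"
        self._send_voice_pin(self.backup_phone, self._pin)
        self.state = "pin_sent"
        return True

    def present_pin(self, pin: str) -> bool:
        """Check the PIN; one wrong guess ends the attempt."""
        if self.state != "pin_sent":
            return False
        ok = hmac.compare_digest(pin.encode(), self._pin.encode())
        self.state = "authorized" if ok else "failed"
        return ok
```

The same `follow_link` and `present_pin` pair could also drive the periodic backup-phone test: send a PIN, and treat a correct reply as evidence that the backup still works.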

Implementation

Implementation of the fourth step above (sending a one-time PIN to the backup phone) is difficult since it requires a completely independent communication channel (which is exactly what we need for strong two-factor authentication). Fortunately, such services already exist in the marketplace. Duo Security, our technology of choice for mobile-based two-factor authentication, exposes an API called Duo Verify that will send a PIN in a voice message to any phone (or an SMS message to a mobile phone). Because it’s a cloud-based API, Duo Verify can be added to just about any existing web-based password reset process.

Analysis

Email-based password reset is an application of “Simple Authentication for the Web” (SAW), which is analyzed in section IV-C5 of a seminal paper by Bonneau et al. SAW is inherently inefficient from a usability standpoint. Two-factor password reset as described above is even less usable since 1) proximity to a non-mobile phone is required, and 2) the user is required to type the PIN into the webapp. However, since password reset is an exceptional event, it is anticipated that these inefficiencies will go unnoticed by users.

A non-mobile phone is not strictly required for two-factor password reset, but since mobile phones can be lost or stolen, using a mobile phone for password reset carries additional risk. It is up to the deployer to decide whether this risk is tolerable.

Indeed, a phone is not required at all. The user could be issued a one-time PIN that is written on a piece of paper and kept in a safe place (Dropbox does this, e.g.). Other alternatives are possible, all with the same goal: to provide a second factor to be layered on top of email-based password reset.

For faculty and staff, a second phone probably makes sense, but it’s doubtful most students will have a second phone. We’ll have to be more creative in the case of students (which we leave as an exercise for the reader).

Summary

Email-based password reset is analogous to password-based authentication, that is, both have good usability but weak security properties. The goal is to strengthen the security of both at once:

- Layer a second factor on top of password-based authentication to thwart phishing and keystroke loggers. Mobile-based two-factor authentication is an obvious choice.

- To protect against lost or stolen mobile devices, layer a second factor on top of ordinary email-based password reset. A second phone is a good choice for this purpose, but other options (such as paper tokens) are also viable.

Effective strong authentication requires a cooperative effort by all parties. To emphasize the importance of BYOT, the institution should provide monthly stipends to participating users. In return, users should agree to passcode-protect their mobile device and to report a lost or stolen mobile device immediately. These actions raise the bar for the bad guys, resulting in systems that are significantly more resilient to attack.

Acknowledgment. Thanks to Joe St Sauver for helpful comments on an early draft of this article.

Bring Your Own Token

By Tom Scavo, InCommon Operations Director

The "bring-your-own-device" (BYOD) phenomenon reached a crescendo on the Internet in early June 2012. A major contributor to the "noise" was no doubt an EDUCAUSE Live webinar that introduced BYOD to a boisterous, record number (388) of eager participants.

As mentioned during the webinar, the first wave of BYOD in higher education is already taking hold at smaller colleges and universities where support staff are limited. It's only a matter of time before mounting economic and social pressures at larger institutions make BYOD the norm. Sure, there are challenges, especially security and privacy issues, but you ignore the inevitability of BYOD at your own peril. As the Borg say, resistance is futile.

Surely the proliferation of mobile devices is driving the BYOD phenomenon. When I received my first cell phone more than a dozen years ago, little did I realize how that meager phone would blossom into a full-fledged "smart" communications device with near 100% penetration. Sheesh, what was I thinking?

So now that we have it, what are we going to do with it? In a word, everything. The particular use case I want to explore here is mobile-based two-factor authentication.

Mobile-Based 2FA

Like many of the exciting technology developments of the day, two-factor authentication is riding on the coattails of the mobile phenomenon. In this case, mobile devices represent a pervasive, ubiquitous platform on which to base that elusive second authentication factor.

The ubiquity of mobile, coupled with the prospect of an independent second factor, is a compelling solution to a long overdue problem (that begins with “pass” and ends with “word”). Yes, mobile will become the next battleground (the “good guys” against the “bad guys”), but mobile-based 2FA significantly raises the bar. Instead of phishing (which the bad guys will increasingly abandon), we can expect more of what we saw in conjunction with the CloudFlare compromise, that is, sophisticated social engineering attacks on privileged accounts. As always, let the user beware!

Bring Your Own Token

Thus the term "bring-your-own-token" (BYOT) is born. BYOT is a term for "various [authentication] methods (sometimes called 'tokenless') that leverage the devices, applications, and communications channels users already have." In this case, the mobile phone, in particular the "smartphone," is leveraged as a "what you have" token used in conjunction with two-factor authentication (2FA).

Cloud-based, two-factor authentication services (such as the Duo Security solution) significantly increase security with little (if any) decrease in usability. If you currently deploy a “strong password” solution, mobile-based 2FA can actually increase both security and usability at the same time. Since some of these solutions (like Duo) are cloud-based, these benefits are obtained in return for a very modest deployment cost.

Deployment Considerations

A moment’s worth of reflection should convince you that any authentication service will have deployment concerns, and a BYOT two-factor authentication deployment is no exception. Of particular concern is what happens in the event of a lost or stolen mobile phone. (By definition, a mobile phone is one that can be lost.) If such a phone does not require a passcode, then all is lost since an attacker can use the phone to reset the user’s password via e-mail (which most phones expose as an “always on” service). Therefore a basic assumption is that mobile phones (which are owned and operated by end users of course) are passcoded.

Note that this deployment issue is not new. In a password-only environment, a laptop without a screen saver is just as vulnerable. (Oh, you stopped worrying about that a long time ago? Yup, me too.)

Thus the security of mobile-based 2FA is reduced to user behavior on the client mobile device, and I don't have to tell you how difficult that’s going to be. One approach is to design an institutional BYOT program that rewards the user for doing the right thing. The institution reimburses each user a fixed, monthly stipend for the use of their mobile device in the workplace. In return, the user agrees (as a matter of policy) to passcode their phone and take appropriate steps if the phone is lost or stolen. In this way, all parties cooperate in an effort to make it more difficult for the bad guys to get the upper hand.

And so we see that mobile-based 2FA is not altogether free. All parties contribute their fair share to the effort. This will shift the attention of the bad guys away from phishing toward more focused attacks, but surely the value of a stolen password file will fall, and thus it will become more difficult for the bad guys to subvert our systems wholesale.

Is OAuth2 In Your Future?

By Tom Scavo, InCommon Operations Director

Whether it becomes a protocol or a framework, OAuth2 certainly deserves another look. In fact, I revisit the nascent worlds of OAuth2 and OpenID Connect often, regularly testing the waters and gauging the current state of the art. At this point, AFAICT there’s really nothing to latch on to unless you’re a bleeding edge developer, researcher, or technology pundit.

People whose opinion I respect predict OAuth2 and friends have a very positive future indeed. Personally I think it’s too early to tell, but from the perspective of a federation operator, are there use cases that would benefit from OAuth2 now?

We are faced with at least one burning use case at the moment. That is, the use case of a low to moderate value federated webapp with very modest attribute requirements. This use case requires near 100% penetration yet should have near zero boarding requirements, that is, Level of Assurance (LoA) is minimal while the barriers to interoperability should be as close to zero as possible.

Relatively speaking, this is a very old use case. It has remained unsolved for so long, it now threatens to unravel the federated approach by marginalizing the hard won successes realized over years of deployment. Thus the opportunity for a young framework (like OAuth2) to step in and make significant inroads is very real. This is of course the way it should be, a kind of survival of the fittest. So let the user beware: OAuth2 may be in your future sooner than you think!

Let me outline the use case in slightly more detail so we know what we’re up against. A typical federated Service Provider (SP) has the following requirements:

- Roughly LoA-1, that is, a basic level of assurance with optional identity (if there is a claim of positive identity, the Identity Provider (IdP) should assert it)

- Globally unique, persistent, non-reassigned identifier

- One or more so-called personal identifiers (e-mail address, person name, and/or human-readable principal name)

- A discovery interface with an 80% success rate (minimum)

- No manual IdP boarding requirements

Expanding on the latter pair of requirements: Assume at least 80% of the users that visit the SP are presented with a discovery interface that includes one of their preferred IdPs, and moreover, the IdP selected by the user meets the assurance and attribute requirements without further human interaction. Remember, this must result in a positive user experience at least 80% of the time!
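To pin down what "80% success rate" means here, the following sketch computes it for a given population. The function and its inputs are hypothetical, and it makes one simplifying assumption: a user who has at least one preferred IdP that is both listed and qualifying will pick it.

```python
def discovery_success_rate(users, listed_idps, qualifying_idps):
    """Fraction of users who can pick a preferred IdP that needs no manual boarding.

    users: iterable of sets, each holding one user's preferred IdPs.
    listed_idps: IdPs shown on the SP's discovery interface.
    qualifying_idps: IdPs that meet the SP's assurance and attribute requirements.
    """
    users = list(users)
    if not users:
        return 0.0
    # An IdP only helps if it is both on the interface and meets requirements.
    usable = set(listed_idps) & set(qualifying_idps)
    hits = sum(1 for preferred in users if preferred & usable)
    return hits / len(users)
```

Both failure modes show up in this one number: a short list of hand-boarded IdPs shrinks `listed_idps`, while a broad list of unvetted IdPs shrinks the overlap with `qualifying_idps`.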

Today of course we are far from meeting the needs of this use case. SPs either manually board IdPs one-by-one, leading to a relatively small group of trusted IdPs, or SPs present the user with a broad selection of IdPs, few of which meet the designated assurance and attribute requirements. In either case, the SP realizes roughly a 20% success rate (at best). Not good.

Solutions anyone? Do OAuth2 and friends play a role here?